Evaluation Overview

The Evaluation phase of Quality AI helps improve the customer experience by letting QA managers define agent evaluation criteria tailored to each contact centre's operational structure. It supports structured performance assessments across Voice and Chat interactions.

QA managers configure evaluation forms that combine weighted metrics to assess agent performance (with percentage-based scoring, the positive weights total 100%). They assign these forms to specific channels and queues for audits; each queue supports only one evaluation form per channel. The result is performance insights, auditing, and Auto QA scoring aligned with operational needs.

Evaluation Forms Structure

The evaluation stage has two components:

- Evaluation Forms: Weighted collections of evaluation metrics that define conversation-level scoring criteria.
- Evaluation Metrics: Individual measurement parameters used for quality assessment.

Key Features

- Multi-language Support: Supports evaluations in multiple languages, with localized metrics for accurate assessment of global teams.
- Advanced Scoring Options: Supports negative scoring, fatal criteria, and pass-score thresholds to refine evaluations and highlight critical issues.
- Channel-Specific Configuration: Supports separate evaluation settings for Voice and Chat channels.
- Form Scoring Types: Supports percentage-based scoring for simpler forms and points-based scoring for complex evaluations.

Access Evaluation Forms

Navigate to Quality AI > CONFIGURE > Evaluation Forms to view and manage evaluation forms.

Evaluation Forms Interface

The Evaluation Forms interface displays the following elements:

- Name: The name of the evaluation form.
- Description: A short description of the form.
- Queues: The queues the form is assigned to, or whether it is unassigned.
- Channel: The channel the form is assigned to (Voice or Chat).
- Created By: The name of the form's creator.
- Pass Score: The minimum score percentage an agent needs to pass on this form.
- Status: Enables or disables scoring for the form. A form must be enabled before it starts scoring.
- Search: Finds evaluation forms by name so you can view or update them.

Note

Enable Auto QA in the Quality AI Settings before creating evaluation forms.

Create a New Evaluation Form

Steps to create a new evaluation form:

- Select the Evaluation Forms tab.
- Select + New Evaluation Form in the upper-right corner.

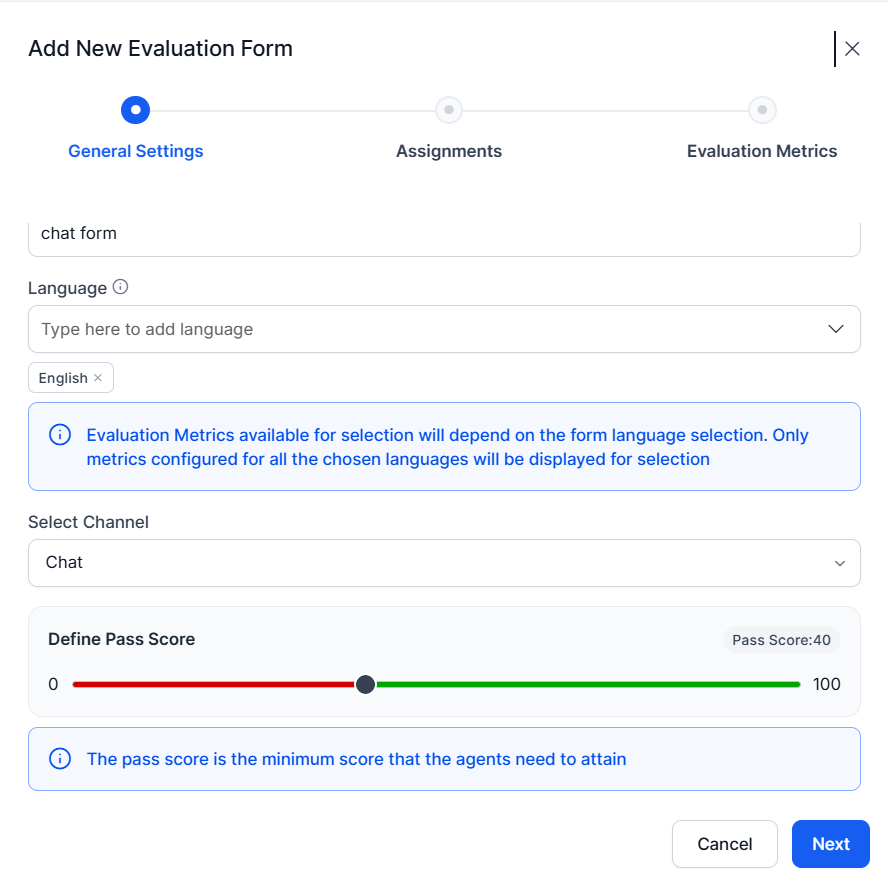

General Settings Configuration

Use this section to configure the general settings for the new evaluation form. The language and channel you select determine which evaluation metrics you can add and update.

Steps to configure general settings:

- Enter a Name.
- Enter a short Description (optional).
- Select a Channel type. The selected channel determines which metrics are available:
  - Chat: Displays only Chat-relevant metrics and excludes speech-based and Voice-specific Playbook metrics.
  - Voice: Displays all applicable Voice metrics, including speech and Playbook metrics.
- Choose a Scoring Type:
  - Percentage-Based Scoring: Traditional weighted scoring, with metric weights totaling 100%. Best for forms with fewer than 20 metrics.
  - Points-Based Scoring: Flexible point allocation with no upper limit on positive points. Ideal for complex forms with 20 or more metrics, where percentage weights would become fractional (for example, 2.5% each across 40 metrics).
- Set the minimum Pass Score percentage for the agent.

Note

To view Agent Scorecards and Agent Attributes, you must enable the Agent Scorecards toggle in Quality AI > Settings.

- Select the form Languages. Language selection works as follows:
  - You can select multiple languages for an evaluation form.
  - The metrics dropdown shows only By-Question metrics configured for all selected languages. This is an AND condition: for example, when English and Dutch are selected, only metrics available in both languages appear.
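The AND condition on form languages amounts to a subset test: a metric is selectable only if every selected form language appears among the metric's configured languages. The following is a minimal Python sketch of that rule; the `METRIC_LANGUAGES` catalogue and the `selectable_metrics` helper are hypothetical and only illustrate the filtering, not the product's implementation.

```python
from typing import Dict, List, Set

# Hypothetical metric catalogue: metric name -> languages it is configured for.
METRIC_LANGUAGES: Dict[str, Set[str]] = {
    "Greeting": {"English", "Dutch", "Hindi"},
    "Mandatory disclaimer": {"English", "Dutch"},
    "Closing": {"English"},
}


def selectable_metrics(form_languages: Set[str]) -> List[str]:
    """Return metrics configured for every selected form language (AND condition)."""
    return sorted(
        name
        for name, languages in METRIC_LANGUAGES.items()
        if form_languages <= languages  # subset test: all form languages supported
    )


print(selectable_metrics({"English", "Dutch"}))  # ['Greeting', 'Mandatory disclaimer']
```

Adding a language to the form can only shrink this list, because each extra language is one more condition every metric must satisfy.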

Assignments Configuration

Use this section to assign available queues to the evaluation form.

Steps to configure assignments:

- From the search list, select a queue available for assignment.
- Select the required queues, then select Add Queues to assign them to the form.
- Add or remove the listed queue assignments if required.

Queue Metrics by Source

- **CCAI**: Ingests conversation data from CCAI.
- **Agent AI**: Processes interactions from Agent AI.

<img src="../evaluation-criteria/evaluation-forms/images/config-queues.png" alt="Add Queues" title="Add Queues" style="border: 1px solid gray; zoom:60%;">

Form Assignment Rules

- Each queue can have only one evaluation form per channel (Voice or Chat).
- The system automatically scores interactions when agents handle customer conversations.
- Scores are calculated from metric outcomes and configured weights.
- You must enable the form to start scoring.

Note

You can assign only one evaluation form to each queue in each of the **Chat** and **Voice** channels.
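The one-form-per-queue-per-channel rule behaves like a lookup keyed on the pair (queue, channel). The sketch below is a hypothetical Python registry that rejects a second form for the same pair while allowing the same queue to have separate Voice and Chat forms; the class, method names, and channel labels are illustrative assumptions.

```python
from typing import Dict, Optional, Tuple

CHANNELS = ("voice", "chat")


class FormAssignments:
    """Hypothetical registry enforcing one evaluation form per (queue, channel)."""

    def __init__(self) -> None:
        # Maps (queue, channel) to the name of the assigned evaluation form.
        self._forms: Dict[Tuple[str, str], str] = {}

    def assign(self, queue: str, channel: str, form: str) -> None:
        if channel not in CHANNELS:
            raise ValueError(f"unknown channel: {channel!r}")
        existing = self._forms.get((queue, channel))
        if existing is not None and existing != form:
            # A second form for the same queue and channel is rejected.
            raise ValueError(
                f"queue {queue!r} already uses {existing!r} for {channel}"
            )
        self._forms[(queue, channel)] = form

    def form_for(self, queue: str, channel: str) -> Optional[str]:
        return self._forms.get((queue, channel))


registry = FormAssignments()
registry.assign("Billing", "voice", "Voice QA")
registry.assign("Billing", "chat", "Chat QA")  # allowed: different channel
```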

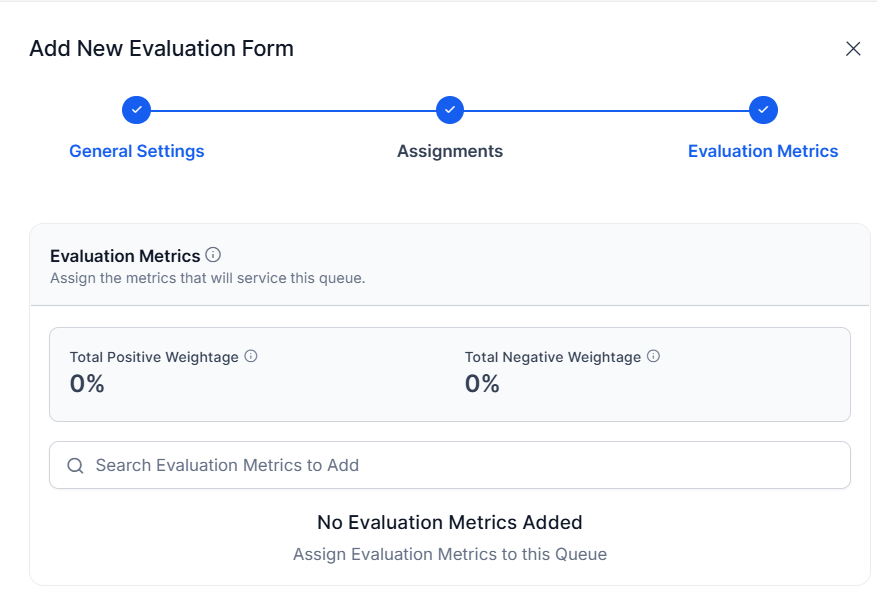

Evaluation Metrics Configuration

Use this section to add evaluation metrics to the evaluation form and configure them for the assigned queues, their interactions, and agents.

Steps to configure evaluation metrics:

- Use the Search option to find and select the required evaluation metrics.
- Choose the evaluation metrics to assign to the corresponding queues and sources:
  - For CCAI and Agent AI queues, all metrics are available.
  - For Quality AI Express queues, whether used alone or together with CCAI or Agent AI queues, only By Question and By Speech metrics are supported.
- Select Edit to assign a weight to each metric based on its importance.

Metric Type Validations by Conversation Source

- Evaluation forms include queue assignments and validate metric types against each assigned queue's conversation source.
- You can reorder metrics after adding them to control their display sequence in the AI-Assisted Manual Audit screen.

Note

The metrics list displays only metrics configured for all selected form languages and the chosen channel.

- Choose the Correct Response (Yes or No) that identifies the expected answer for validation.
- Assign weights; the system validates each weight against the expected response:
  - Weightage: Assign the weight (percentage or points, depending on the scoring type) based on the Correct Response validation.
  - Total Positive Weightage: The sum of all positive metric weights.
  - Total Negative Weightage: The sum of all negative metric weights.
  - Outcome: Indicates whether the agent's response or behavior matches the expected standard defined by the metric:
    - Yes: The agent's response (such as greeting the customer) matches the Correct Response, so the system assigns the metric's positive weight.
    - No: The agent's response (such as responding rudely) doesn't match the Correct Response, so the system assigns zero or negative weight.
- Turn on the Fatal Error toggle if failing the metric is a critical failure.
- Select Create to finalize the form.
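The per-source metric availability described in the steps above (all metrics for CCAI and Agent AI queues; only By Question and By Speech metrics once a Quality AI Express queue is involved) can be sketched as a small validation helper. This is a hypothetical Python illustration: the source labels, function names, and the idea of returning `None` for "no restriction" are assumptions, not product identifiers.

```python
from typing import FrozenSet, Optional, Set

# Metric types that Quality AI Express queues support, per the rules above.
EXPRESS_METRIC_TYPES: FrozenSet[str] = frozenset({"By Question", "By Speech"})


def allowed_metric_types(queue_sources: Set[str]) -> Optional[FrozenSet[str]]:
    """Return the permitted metric types, or None when every type is allowed.

    Source labels such as "CCAI", "Agent AI", and "Quality AI Express" are
    illustrative strings, not identifiers from the product.
    """
    if "Quality AI Express" in queue_sources:
        # Express queues restrict the form, even when mixed with CCAI or Agent AI.
        return EXPRESS_METRIC_TYPES
    return None  # CCAI and Agent AI queues: all metric types are available


def is_metric_allowed(metric_type: str, queue_sources: Set[str]) -> bool:
    allowed = allowed_metric_types(queue_sources)
    return allowed is None or metric_type in allowed
```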

Advanced Configuration

Scoring Logic

The system scores each conversation using the form's weighted metrics. If the total score meets or exceeds the configured pass score percentage, the form receives a Pass status; otherwise, it receives a Fail status. The score is calculated from the weighted metrics and the priority level the supervisor assigns to each form.
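As a rough illustration of the Pass/Fail rule, the sketch below sums the weights a percentage-based form awards for met metrics and compares the total with the pass score. It is a simplified, hypothetical model: `MetricResult` and the helper functions are illustrative names, and the supervisor-assigned priority level is left out because its effect on the score is not described here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MetricResult:
    name: str
    weight: float  # percentage weight; positive weights on the form total 100
    met: bool      # True when the outcome matched the metric's Correct Response


def conversation_score(results: List[MetricResult]) -> float:
    # A metric contributes its weight only when the agent met the expected response.
    return float(sum(r.weight for r in results if r.met))


def pass_status(score: float, pass_score: float) -> str:
    # Meeting the pass score exactly counts as a Pass.
    return "Pass" if score >= pass_score else "Fail"


results = [
    MetricResult("Greeting", 30, True),
    MetricResult("Verification", 50, True),
    MetricResult("Closing", 20, False),
]
score = conversation_score(results)
print(score, pass_status(score, pass_score=80))  # 80.0 Pass
```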

Configuration Logic

The form-level configuration logic determines how the system applies weight validation. It supports both training-based and generation-based adherence detection methods, and the system enforces validation automatically based on the designated Correct Response.

Logic Rules

Positive Metrics (Correct Response = Yes)

- Use for metrics where Yes represents successful adherence.
- Example: Did the agent greet the customer?
- Validation: Only positive weights are allowed for Yes responses; No responses take zero or negative weights.
- Scoring: When the agent greets the customer, the metric contributes positively to the score.

Negative Metrics (Correct Response = No)

- Use for metrics where No represents the desired behavior.
- Example: Was the agent rude to the customer?
- Validation: Only positive weights are allowed for No responses; Yes responses take zero or negative weights.
- Scoring: When the agent isn't rude, the metric contributes positively to the score.

Correct Response

The Correct Response setting specifies the expected outcome for each metric and determines how the system validates and applies weights. It supports both positive and negative metrics, enabling flexible scoring logic.

Purpose: The system uses the Correct Response to validate adherence in training-based scenarios, so scoring aligns with business goals whether you track desired or undesired behaviors.

Weightage Validation Rules

- If Correct Response = Yes: You can assign only positive weights to Yes outcomes and only zero or negative weights to No outcomes.
- If Correct Response = No: You can assign only positive weights to No outcomes and only zero or negative weights to Yes outcomes.
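Both validation rules reduce to a sign check on each metric's pair of outcome weights. Below is a minimal Python sketch with a hypothetical `validate_weights` helper; treating "positive" as strictly greater than zero is an assumption.

```python
def validate_weights(correct_response: str, weight_yes: float, weight_no: float) -> None:
    """Raise ValueError if a metric's outcome weights break the sign rules.

    The outcome that equals the Correct Response must have a positive weight;
    the opposite outcome may only have a zero or negative weight.
    """
    if correct_response not in ("Yes", "No"):
        raise ValueError("correct_response must be 'Yes' or 'No'")
    if correct_response == "Yes":
        correct_weight, other_weight = weight_yes, weight_no
    else:
        correct_weight, other_weight = weight_no, weight_yes
    if correct_weight <= 0:
        raise ValueError("the correct outcome must have a positive weight")
    if other_weight > 0:
        raise ValueError("the other outcome must have a zero or negative weight")


# Positive metric: "Did the agent greet the customer?" (Correct Response = Yes)
validate_weights("Yes", weight_yes=10, weight_no=0)
# Negative metric: "Was the agent rude to the customer?" (Correct Response = No)
validate_weights("No", weight_yes=-20, weight_no=15)
```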

Weightage Configuration

When editing evaluation metrics, assign weights using the scoring type (percentage-based or points-based) selected during form creation. The scoring type determines how the system calculates metric importance.

Positive Weightage Requirements

- Total positive weights across all metrics must equal 100% (percentage-based scoring).
- Individual metrics can have positive values of up to 100%.
- Distribute positive weights according to each metric's importance to the overall evaluation.
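For percentage-based forms, the positive-weight requirements can be expressed as a total check plus a per-metric ceiling; the sketch also contrasts points-based scoring, which has no upper limit on positive points. The function name and the `"percentage"`/`"points"` labels are hypothetical.

```python
import math
from typing import List


def validate_positive_weights(positive_weights: List[float], scoring_type: str) -> None:
    """Check positive metric weights for a hypothetical form.

    scoring_type is "percentage" or "points" (illustrative labels).
    """
    if any(w <= 0 for w in positive_weights):
        raise ValueError("positive weights must be greater than zero")
    if scoring_type == "percentage":
        if any(w > 100 for w in positive_weights):
            raise ValueError("a single metric cannot exceed 100%")
        total = sum(positive_weights)
        # isclose tolerates floating-point rounding in fractional weights.
        if not math.isclose(total, 100.0):
            raise ValueError(f"positive weights total {total}%, expected 100%")
    elif scoring_type != "points":
        raise ValueError(f"unknown scoring type: {scoring_type!r}")
    # Points-based forms have no upper limit on positive points, so no total check.


# 40 equal metrics need 2.5% each under percentage-based scoring ...
validate_positive_weights([2.5] * 40, "percentage")
# ... whereas points-based scoring can give each metric a whole number of points.
validate_positive_weights([1] * 40, "points")
```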

Negative Weightage Guidelines

- Negative weights have no upper-limit validation during configuration.
- An individual metric can have a negative weight below -100.
- Negative weights can collectively go below -100 across all metrics.
- Final conversation scores are automatically capped at a minimum of -100.

Scoring Calculation

The system calculates conversation scores using weighted metrics. If a score falls below -100, the system caps it at -100 to keep scoring consistent.
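The sketch below shows how negative weights and the minimum cap interact. It assumes the cap is a floor of -100 applied to the summed outcome weights; `SCORE_FLOOR` and `conversation_score` are hypothetical names, and the real calculation may differ.

```python
from typing import List

# Assumed minimum conversation score (the cap described above).
SCORE_FLOOR = -100.0


def conversation_score(outcome_weights: List[float]) -> float:
    """Sum the weight earned by each metric's observed outcome, capped at SCORE_FLOOR.

    Each entry is the weight configured for the outcome the metric actually
    produced: positive when the agent met the Correct Response, otherwise
    zero or negative.
    """
    return max(SCORE_FLOOR, float(sum(outcome_weights)))


print(conversation_score([60, 40, -30]))   # 70.0
print(conversation_score([0, -90, -120]))  # -100.0
```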

Error Handling and Logic Enforcement

Fatal Error Configuration

Fatal Error configuration identifies metrics that are crucial to compliance or functional requirements. When enabled, these metrics can override the entire conversation score regardless of other metric performance.

Fatal Error Criteria

The system marks a conversation as a fatal error if any of the following conditions occur:

- The agent fails to follow the configured process throughout the conversation.
- The agent behaves harshly or unprofessionally throughout the interaction.
- The agent skips any safety-critical or mandatory steps.
- The agent fails a metric designated as a fatal error.

Example: Did the agent provide the mandatory disclaimer in the conversation?

If the required disclaimer is missing, the metric's outcome is No, so the system flags that fatal metric and marks the entire conversation as a fatal error. The conversation score becomes zero, even if all other evaluation metrics pass.

Use Cases: Compliance requirements, disclaimer delivery, and critical functional requirements.
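The disclaimer example can be modeled as an override check that runs before normal scoring. Below is a minimal, hypothetical Python sketch; the `Outcome` type and `final_score` function are illustrative, and other scoring rules (such as the minimum cap) are omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Outcome:
    metric: str
    weight: float        # weight earned for the observed outcome
    fatal: bool = False  # Fatal Error toggle on the metric
    met: bool = True     # True when the outcome matched the Correct Response


def final_score(outcomes: List[Outcome]) -> float:
    # Failing any fatal metric overrides everything else: the score becomes zero.
    if any(o.fatal and not o.met for o in outcomes):
        return 0.0
    # Otherwise fall back to the ordinary weighted sum (other rules omitted).
    return float(sum(o.weight for o in outcomes))


outcomes = [
    Outcome("Greeting", 20),
    Outcome("Resolution", 50),
    Outcome("Mandatory disclaimer", 0, fatal=True, met=False),
]
print(final_score(outcomes))  # 0.0
```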

Managing Existing Evaluation Forms

This section explains how to update (edit or delete) an existing evaluation form.

Edit Existing Evaluation Forms

Steps to edit an existing evaluation form:

- Select the evaluation form you want to edit.
- Select Next to update the required evaluation metrics fields.
- Select Next to update the required assignments fields.
- Select Update to save your changes.

Delete Existing Evaluation Metrics

Steps to delete an evaluation metric:

- Select Delete. A warning dialog prompts you to update the weights of the remaining metrics.
- Update the remaining metric weights as prompted.
- Select Next.

Note

Deleting a form permanently deletes all of its associated data; this can't be undone.

Warnings and Error Messages

Language Configuration Warnings

This section describes the rules, warnings, and error messages that apply when you add or remove languages in an evaluation form, based on metric-level and form-level language configuration.

Unsupported Language Error (Form-Level)

- Suppose a form supports English and Dutch, and its metrics support only those two languages. Adding Hindi to the form triggers a warning because the child (By-Question) metrics don't support Hindi. Before adding a new language, make sure that all metrics in the form support it.

To resolve this:

- Review the metric-level configuration for the new language (for example, Hindi).
- Update every metric used in the form to support the new language by adding the language to each metric.
- After all metrics support the language, add the language to the form.
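Before adding a language to a form, it helps to list which of the form's metrics still lack it. The hypothetical Python helper below does that check; the form data and function name are illustrative, and the form-level language should be added only once the returned list is empty.

```python
from typing import Dict, List, Set


def metrics_missing_language(form_metrics: Dict[str, Set[str]], language: str) -> List[str]:
    """Return the form's metrics that are not yet configured for `language`."""
    return sorted(name for name, langs in form_metrics.items() if language not in langs)


# Hypothetical form: metric name -> languages configured at the metric level.
form_metrics = {
    "Greeting": {"English", "Dutch"},
    "Mandatory disclaimer": {"English", "Dutch", "Hindi"},
}
print(metrics_missing_language(form_metrics, "Hindi"))  # ['Greeting']
```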

Language Limitation on Adding New Language

- This warning appears when a form uses metrics that don't support a language configured at the form level. For example, the form includes Hindi, but some metrics you add or update don't support Hindi.

To resolve this, do one of the following:

- Option 1: Configure the required language (for example, Hindi) for the selected metrics at the metric level.
- Option 2: Choose metrics that support the required language.

Channel Mode Change Warning

- When you switch an existing form's channel between Voice and Chat, a warning message appears about the metrics associated with that channel.
- The system automatically deletes speech-based metrics when you switch the channel from Voice to Chat or from Chat to Voice.

To resolve this, perform the following actions:

Speech Metric Addition Limitation

Evaluation forms support only one speech metric per subtype: Crosstalk, Dead Air, and Speaking Rate. Selecting a second metric of a subtype that's already in the form's Evaluation Metrics list triggers an error message.

Note

- You can add only one metric of each subtype.
- To add a different metric of that subtype, first remove the existing one.
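The one-metric-per-subtype limit can be sketched as a map keyed by speech subtype, where adding a second metric for a subtype fails until the first is removed. This is a hypothetical Python illustration; the function and metric names are assumptions, and only the three subtype names come from the rule above.

```python
from typing import Dict

SPEECH_SUBTYPES = {"Crosstalk", "Dead Air", "Speaking Rate"}


def add_speech_metric(form_speech: Dict[str, str], metric: str, subtype: str) -> None:
    """Add a speech metric, allowing at most one metric per subtype.

    form_speech maps subtype -> metric name for a hypothetical form.
    """
    if subtype not in SPEECH_SUBTYPES:
        raise ValueError(f"unknown speech subtype: {subtype!r}")
    if subtype in form_speech:
        raise ValueError(
            f"{subtype} already uses {form_speech[subtype]!r}; remove it first"
        )
    form_speech[subtype] = metric


speech: Dict[str, str] = {}
add_speech_metric(speech, "Crosstalk check", "Crosstalk")
add_speech_metric(speech, "Long silence", "Dead Air")
```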